There are approximately 8,000 mutual fund strategies, 7,000 separate account strategies and nearly 2,000 ETFs. Unfortunately, a good portion of these strategies have produced, and will continue to produce, disappointing returns for investors. With so many products and so few good long-term options, it’s important for investors to “do their homework” before making an investment. But before doing more detailed work, the list of investment options needs to be trimmed. One way to trim the list is to apply broad quantitative screens in third-party databases. These screens may include items such as manager tenure, fees or portfolio turnover. However, even after applying these broad screens, a significant number of products will remain. Nor do the screens provide much useful information about managers, particularly qualitative information, which we spend a disproportionate amount of time on. So research teams also search through investment opportunities by meeting regularly with portfolio managers and representatives from asset management firms.

In my time as an investment analyst, I have met with hundreds of firms. On the surface, every firm tends to have a compelling story and an attractive way to present its investment team and demonstrate its skill as a manager. However, as we all know, what lies beneath the surface can be the most telling. So it’s important for an analyst to determine what’s behind a manager’s seemingly attractive results, what differentiates the manager from competitors and, most importantly, whether the manager has a clearly defined investment process that has consistently delivered (and will continue to deliver) superior risk-adjusted returns to investors.

At this point, you may be thinking, “That’s great that you meet with managers, but what’s next? What follow-up analysis do you conduct?” For the managers that grab our attention, we begin the next phase, which I believe is the fun part: digging into the background of the firm, the investment team and the investment process, and analyzing portfolio characteristics, historical holdings and performance, among many other factors. Rather than skip around to discuss other factors we analyze, I’ll build on the ideas from my last blog post, “Danger of Point-in-Time Analysis,” which discusses the drawbacks of point-in-time performance analysis and the merits of using alternative methods to analyze results. Now I’ll take the discussion one step further to answer the next question: “Once you have the performance broken down the way you would like to see it, then what?”

The most effective way I can answer this question is to walk through a real-life example of a manager that “pitched” their strategy to us during a meeting. For obvious reasons, I will not divulge the name of the manager or the strategy, but I will provide some general background:

· The manager and their team were hired by an asset management firm to run small- and small-/mid-cap value-oriented equity strategies.

· One of the key selling points the presenters focused on in our meeting was the manager’s excellent track record managing a similar strategy at a previous firm.

· The manager’s track record on the “strong performing” strategy stretched from March 2004 through September 2008.

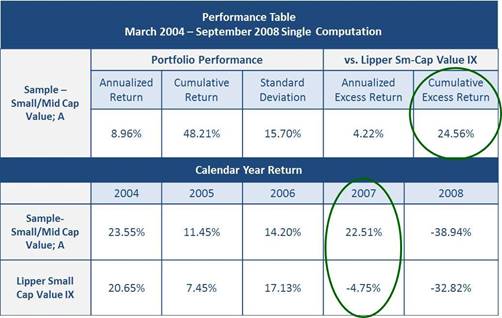

Because the manager made a compelling case for investment (and because the strategy is in a capacity-constrained asset class), we took a closer look. One of the first items we examined was the manager’s track record at the previous firm. As the table above shows, the manager had an excellent track record over the entire period they managed the strategy. For instance, the strategy delivered a cumulative excess return of +24.56% over its Lipper peer group benchmark (circled in green). Most notably, the strategy outperformed its peers by a significant margin in 2007 (also circled in green).
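The post does not say exactly how the +24.56% cumulative excess return was computed. The sketch below shows two common conventions, assuming monthly returns expressed as decimals (0.012 for +1.2%): the arithmetic difference between the compounded strategy and benchmark returns, and the geometric version, (1 + strategy) / (1 + benchmark) − 1. The function names are hypothetical, for illustration only.

```python
from math import prod
from typing import Sequence


def cumulative_return(returns: Sequence[float]) -> float:
    """Compound a sequence of periodic returns given as decimals."""
    return prod(1 + r for r in returns) - 1


def cumulative_excess_return(
    strategy: Sequence[float],
    benchmark: Sequence[float],
    geometric: bool = False,
) -> float:
    """Cumulative excess return of a strategy over a benchmark.

    Assumption: both series cover the same periods in the same order.
    arithmetic (default): compounded strategy minus compounded benchmark
    geometric:            (1 + strategy) / (1 + benchmark) - 1
    """
    if len(strategy) != len(benchmark):
        raise ValueError("strategy and benchmark must cover the same periods")
    s = cumulative_return(strategy)
    b = cumulative_return(benchmark)
    return (1 + s) / (1 + b) - 1 if geometric else s - b
```

The two conventions can differ noticeably over a multi-year record, so it is worth confirming which one a manager’s pitch book uses before comparing figures across managers.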

With the above data in hand, the next question becomes, “What happened in 2007?” As a starting point, we broke out the strategy’s performance relative to its Lipper peer group benchmark into monthly periods. The monthly relative performance figures revealed that most of the outperformance came during the middle eight months of 2007 (highlighted in green).
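The post doesn’t describe the mechanics of the monthly breakdown, so here is one possible sketch. It computes month-by-month relative returns (strategy minus benchmark) and then uses Kadane’s maximum-subarray algorithm to find the contiguous run of months that contributed the most excess return. Both the choice of Kadane’s algorithm and the function names are assumptions for illustration, and the sample returns in the usage block are made up, not the manager’s actual data. Summing monthly excess returns is an additive approximation rather than a compounded figure.

```python
from typing import List, Sequence, Tuple


def monthly_excess(strategy: Sequence[float], benchmark: Sequence[float]) -> List[float]:
    """Month-by-month relative return: strategy minus benchmark (decimals)."""
    if len(strategy) != len(benchmark):
        raise ValueError("strategy and benchmark must cover the same months")
    return [s - b for s, b in zip(strategy, benchmark)]


def best_window(excess: Sequence[float]) -> Tuple[int, int, float]:
    """Kadane's algorithm: contiguous months with the largest summed excess.

    Returns (start_index, end_index_inclusive, summed_excess).
    """
    if not excess:
        raise ValueError("need at least one month")
    best_sum, best_start, best_end = excess[0], 0, 0
    run_sum, run_start = excess[0], 0
    for i in range(1, len(excess)):
        x = excess[i]
        # A negative running total can only drag the window down: restart here.
        if run_sum < 0:
            run_sum, run_start = x, i
        else:
            run_sum += x
        if run_sum > best_sum:
            best_sum, best_start, best_end = run_sum, run_start, i
    return best_start, best_end, best_sum


if __name__ == "__main__":
    # Hypothetical monthly returns for one calendar year (index 0 = January).
    strategy = [0.01, 0.00, 0.03, 0.02, 0.04, 0.01, 0.02, 0.03, 0.02, -0.01, 0.00, 0.01]
    benchmark = [0.01, 0.01, 0.01, 0.01, 0.01, 0.00, 0.01, 0.01, 0.00, 0.00, 0.01, 0.01]
    start, end, total = best_window(monthly_excess(strategy, benchmark))
    print(start, end)  # prints: 2 8  (March through September)
```

A fixed-length rolling window (for example, every eight-month span) is a reasonable alternative if you want to compare windows of equal length across managers.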